Meet 👋 Sahara,

Best-in-class speech recognition and text-to-speech models for African accents

Beats OpenAI, Google, AWS, and Azure across multiple benchmarks

Trusted by Startups and Enterprises

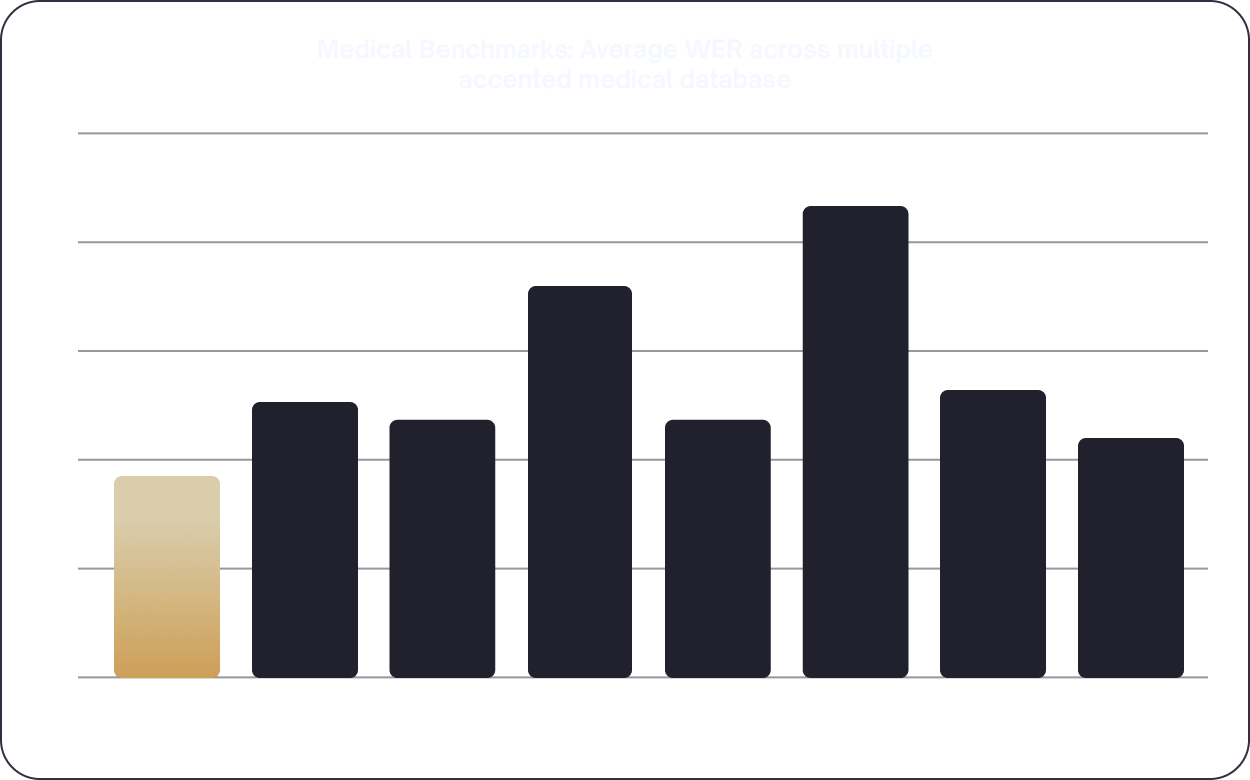

Medical

Here’s the kicker: we’re a tiny, seed-stage startup. We don’t have the luxury of bottomless compute or internet-scale data. So we had to do things differently — leaner, smarter, and relentlessly focused on real-world performance.

Mark White

CEO of Company

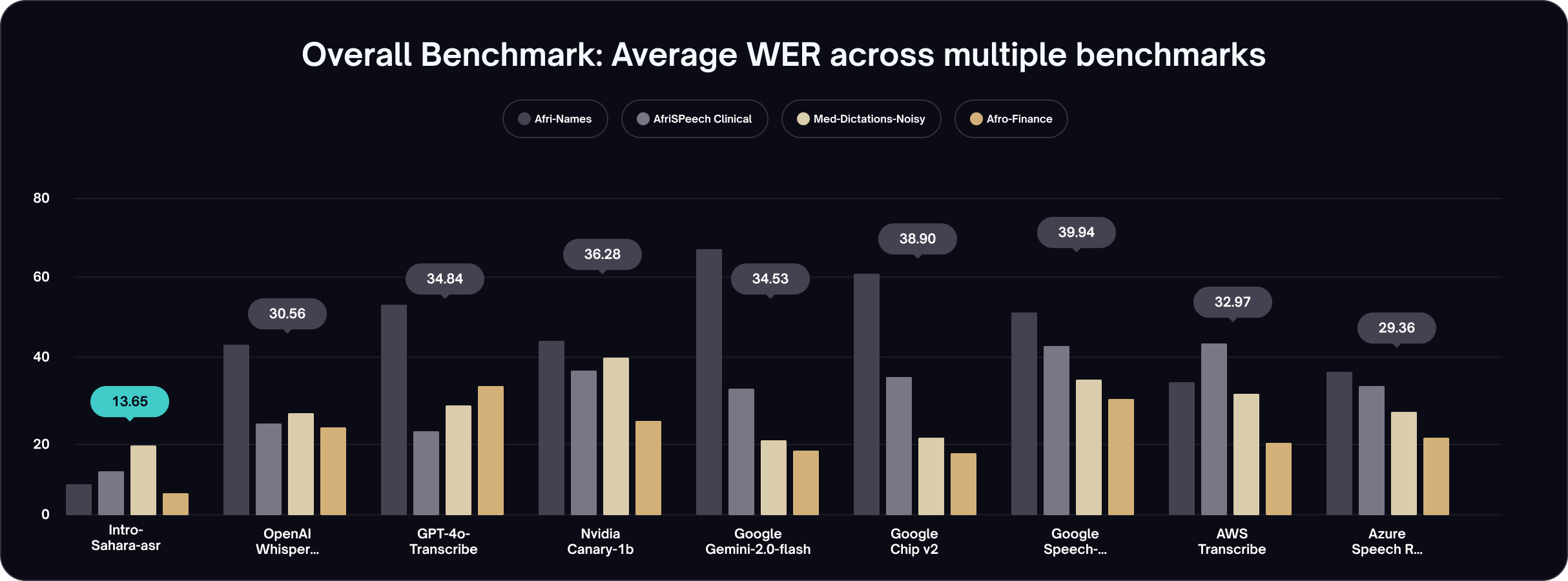

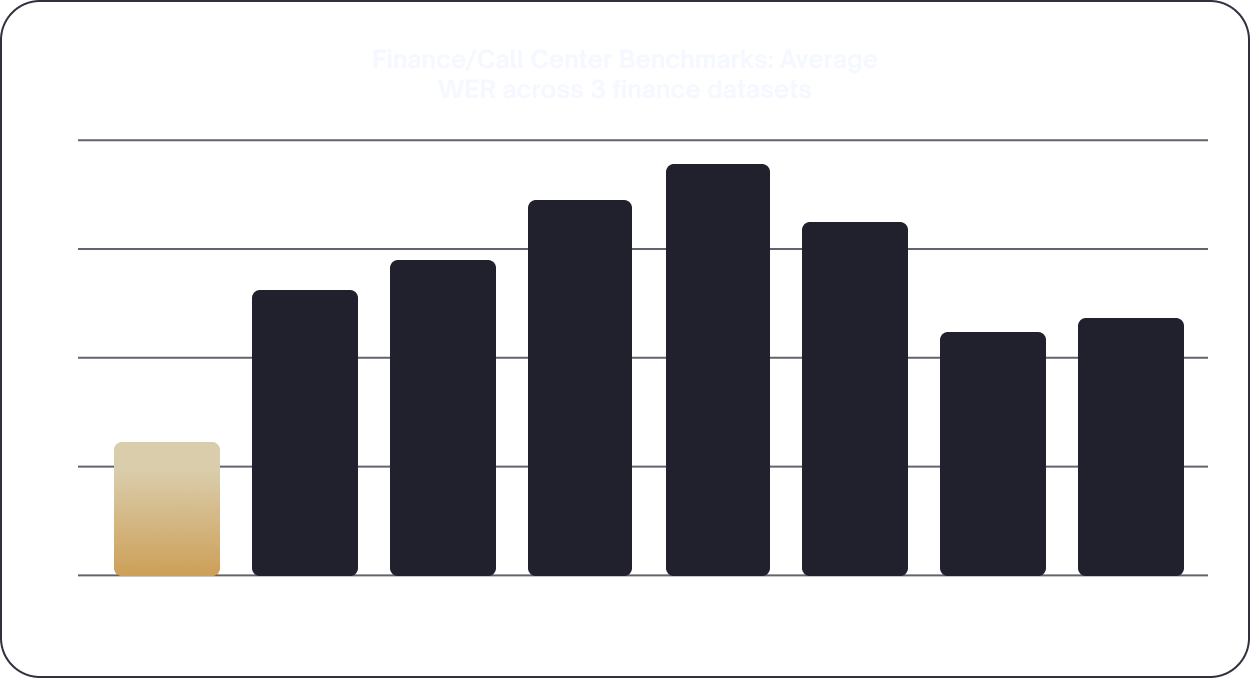

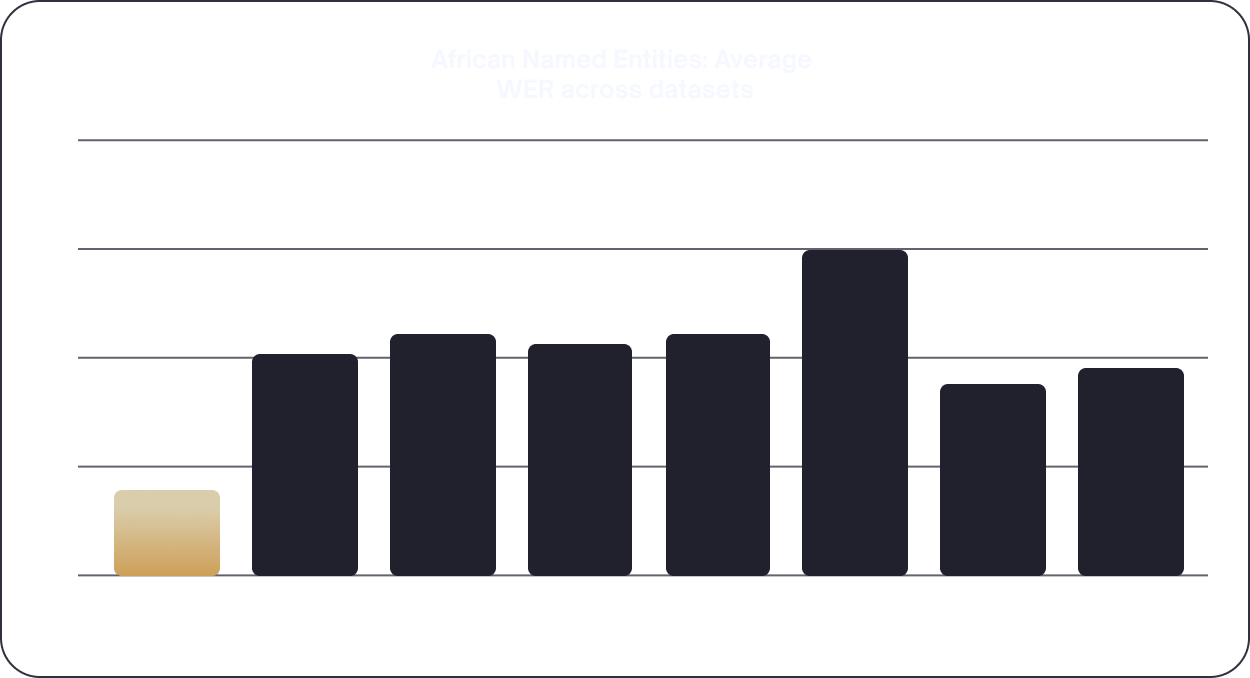

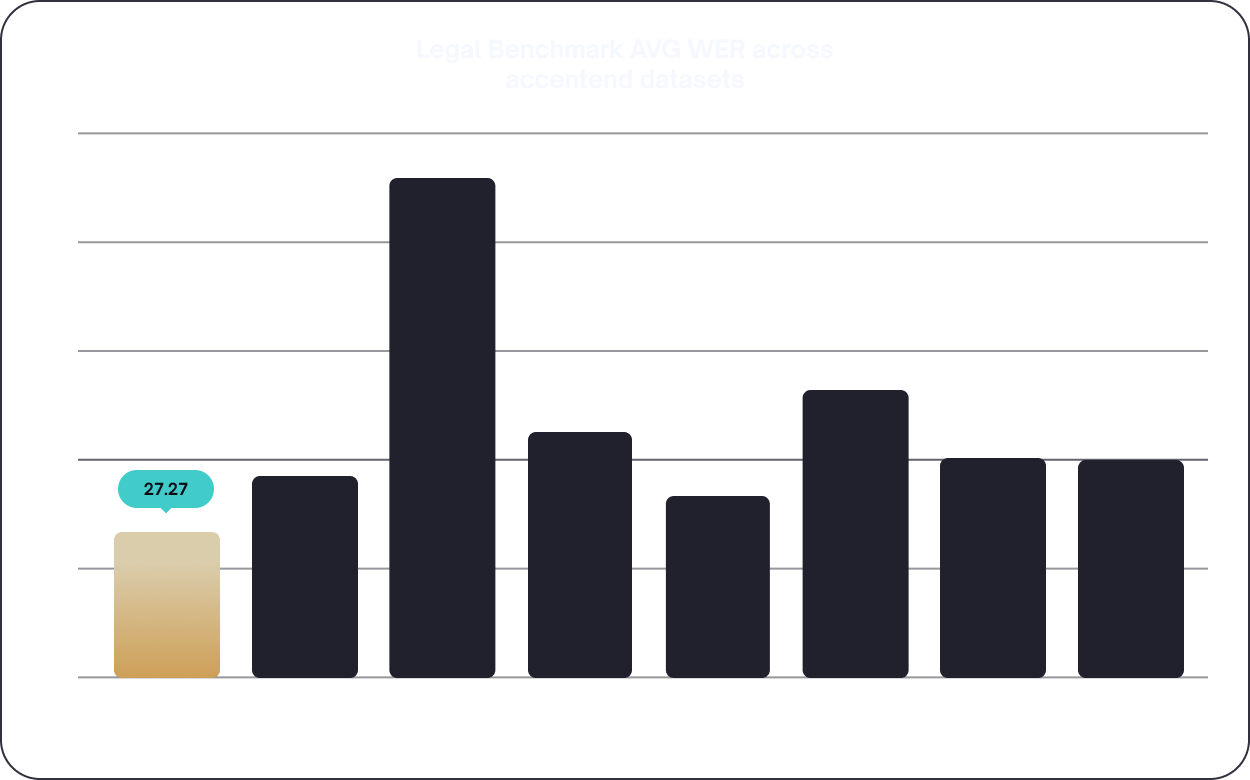

Benchmarks

Metric: Word Error Rate (WER), lower is better

A Quick Primer on Word Error Rate (WER)

What is WER?

Word Error Rate (WER) is a common measure of how accurate a speech recognition system is. It compares what the system transcribed with what was actually said, and divides the number of word-level errors by the total number of words spoken. Because it measures the model’s error, lower is better.

Why it matters: WER tells us how reliable a speech-to-text system is. A lower WER means fewer mistakes and better performance, which is critical in areas like healthcare, legal, and customer service.

Strengths: simple to calculate; easy to compare across systems; works across languages.

Weaknesses: treats all errors equally, even when some are more harmful (e.g., “don’t take” vs. “take”); counts a single-character slip such as “carrot” vs. “carot” as a full word error, which can be overly harsh; doesn’t consider punctuation or context; may not reflect user satisfaction or usefulness.

WER = (Substitutions + Insertions + Deletions) ÷ Total Words

Substitutions: wrong words

Insertions: extra words

Deletions: missing words

Spoken: “Take your medicine daily”

Transcript: “Take your message daily”

WER = 1 error ÷ 4 words = 25%
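To make the arithmetic above concrete, here is a minimal Python sketch of the calculation. It is an illustration only, not Sahara's evaluation pipeline: the function name `word_error_rate` is hypothetical, and lowercasing plus whitespace tokenization are simplifying assumptions. "Total Words" is taken to be the word count of the spoken (reference) text, and the error count is the word-level edit distance between the two word sequences.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + insertions + deletions) / words in reference."""
    # Assumption: lowercase and split on whitespace; real evaluations
    # usually apply more careful text normalization.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: a spoken word is missing
                curr[j - 1] + 1,     # insertion: an extra word appears
                prev[j - 1] + cost,  # match, or substitution if words differ
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("Take your medicine daily", "Take your message daily"))  # 0.25
```

Because the comparison is word by word, `word_error_rate("carrot", "carot")` returns 1.0: a one-letter slip counts as a full substitution, which is the harshness noted in the weaknesses above.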

Punching well above its weight against models 2-3x its size, Sahara demonstrates superior performance on accented English speech in a pan-African context across multiple industries (health, finance, legal, academia, etc.) and domains, with strong robustness to background noise, varied intonation, and domain-specific vocabulary.

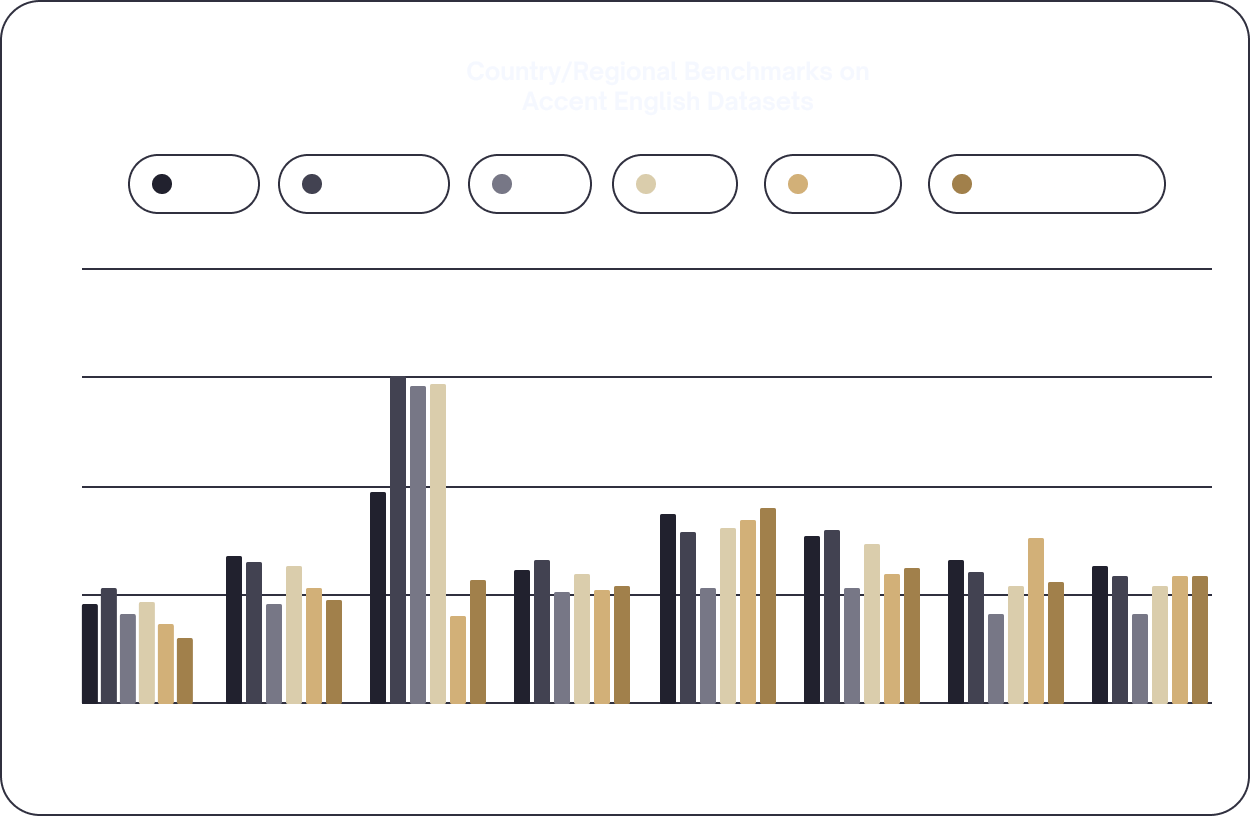

Domains

Medical

Call Center

Named Entities

Legal & Parliamentary

Country accent